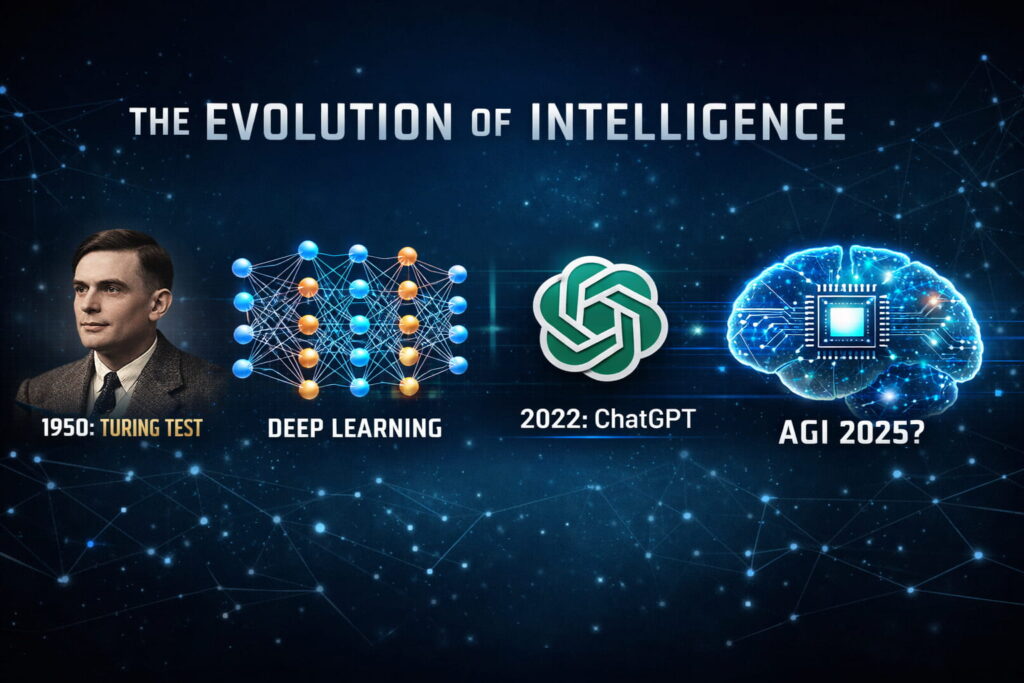

Artificial Intelligence (AI) is one of the most important technologies of our time. But do you know where it all began? The history of AI dates back to the 1950s, and understanding its journey can help us appreciate how AI evolved from simple concepts into the advanced systems we use today.

Artificial intelligence didn’t appear overnight, and it didn’t move in a straight line, either. Today’s AI systems can write, summarize, translate, generate images, and support real work across industries; 64% of businesses now say that AI is enabling their innovation. These models might feel novel to a lot of us, but they’re built on decades of research, experimentation, hype cycles, setbacks, and breakthroughs (plus a few moments where the entire field changed direction).

This article walks you through the history of AI as a clear timeline: what happened, when, why it mattered, and how we got from early theory to the AI tools of the modern era.

What is Artificial Intelligence?

Artificial Intelligence (AI) refers to the development of computer systems that can perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation. AI technologies aim to simulate intelligent behavior: learning from data, adapting to new inputs, and performing tasks with varying degrees of autonomy.

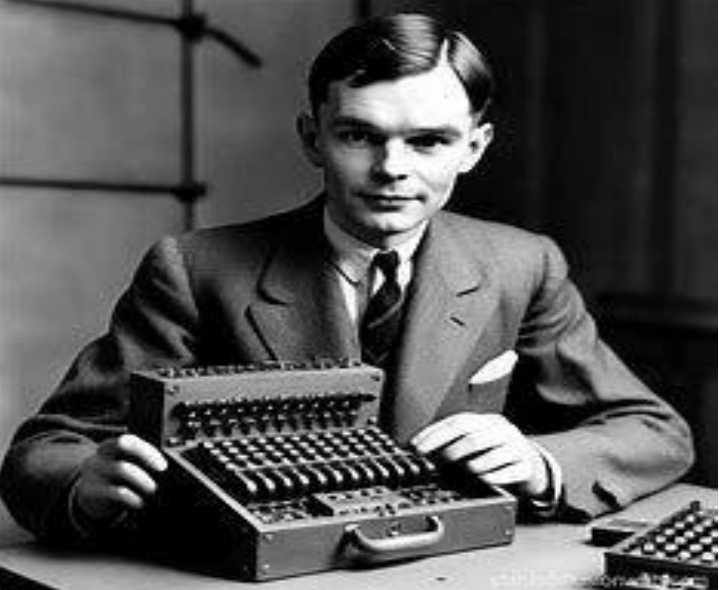

1950: Alan Turing and the “Turing Test”

In 1950, British scientist Alan Turing introduced a famous question:

“Can machines think?”

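Because that question is hard to answer directly, he proposed a practical substitute: if a machine can hold a conversation so convincingly that a human judge cannot reliably tell it apart from a person, we have good reason to call its behavior intelligent.

This idea became known as the Turing Test, a benchmark for a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human.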

1956: Dartmouth Conference – The Official Birth of AI

The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for the Dartmouth summer workshop, held in 1956. That conference marked the formal birth of AI as a field of study, as researchers began exploring how machines could simulate human intelligence. McCarthy, widely regarded as the father of AI, and his co-organizers built the proposal on the conjecture that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

1960s–1970s: The First AI Winter – Challenges and Lessons

After the optimism of the late 1950s and 1960s, progress slowed as the limits of early AI became apparent: programs that handled toy problems could not scale to real-world complexity, and computers lacked the memory and speed to do much more. By the mid-1970s funding had dried up, ushering in a period of stagnation now known as the “first AI winter.”

Even so, researchers continued their work behind the scenes, and foundational ideas developed in these early decades, such as symbolic reasoning and problem-solving algorithms, guided future research.

1980s–1990s: Expert Systems and the Rise of Machine Learning

AI regained momentum in the 1980s with expert systems, which captured specialist knowledge as rules (often in the form of “if X, then Y”) to mimic human decision-making in specific domains such as medicine and business. They worked only in narrow contexts, but they brought AI into real organizations.

By the 1990s, machine learning had become a practical path, allowing computers to learn patterns from data rather than relying solely on pre-defined rules. This was the start of AI moving from labs into real-world applications.
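The contrast between hand-written rules and learning from data can be made concrete with a toy example. The Python sketch below is purely illustrative: the loan-approval scenario, the functions `expert_system` and `learn_threshold`, and all cutoffs and data points are hypothetical, and real expert systems and machine learning models are far more sophisticated. The first function encodes knowledge as explicit “if X, then Y” rules, roughly the way expert systems did; the second picks a decision threshold by counting its errors on labelled examples, which is about the simplest possible form of learning from data.

```python
# Toy illustration only: the loan-approval scenario, rules, and data are hypothetical.

def expert_system(income: float, debt: float) -> str:
    """Rule-based approach: a human expert writes explicit 'if X, then Y' rules."""
    if debt > income * 0.5:  # rule 1: too much debt relative to income
        return "reject"
    if income >= 40_000:  # rule 2: income above a hand-picked cutoff
        return "approve"
    return "review"  # no rule fired, so a human decides


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Learning approach: choose the income cutoff that misclassifies the
    fewest labelled (income, approved) examples."""
    candidates = sorted(income for income, _ in examples)
    best_threshold, best_errors = candidates[0], len(examples) + 1
    for t in candidates:
        # Predict "approved" when income >= t, then count disagreements with the labels.
        errors = sum((income >= t) != approved for income, approved in examples)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


if __name__ == "__main__":
    history = [(20_000, False), (30_000, False), (35_000, False),
               (45_000, True), (50_000, True), (60_000, True)]
    print(expert_system(income=50_000, debt=10_000))  # approve
    print(learn_threshold(history))  # 45000
```

The design difference is the point: to change the expert system’s behavior, someone has to rewrite its rules by hand, while the learned threshold shifts automatically when the labelled examples change.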

2000s–2010s: Big Data, Better Computing, and Deep Learning

Three developments drove progress in this period:

1. More data: the web, sensors, enterprise systems, and digital behavior created enormous datasets, allowing AI to move beyond small experiments.

2. More compute: faster hardware made training large models possible.

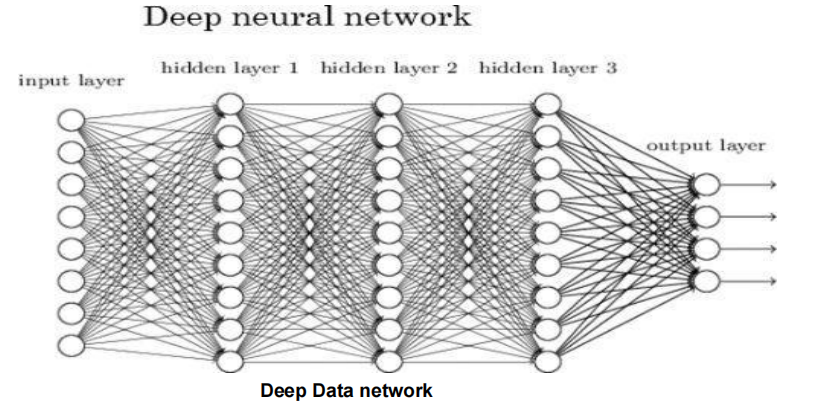

3. Deep learning: a subset of machine learning built on multi-layer neural networks, loosely inspired by the structure of the human brain, emerged as a powerful way to train AI systems for complex tasks. It enabled breakthroughs in image recognition, speech processing, and natural language understanding.

How Artificial Intelligence Moved from Research Labs to Real-World Systems

As AI technologies advanced, they moved beyond experimental demonstrations into real-world development and deployment. Researchers and organizations began applying AI to practical problems across industries, marking a major shift from theory to execution:

● Self-driving car prototypes demonstrated autonomous navigation.

● AlphaGo defeated top professional Go players, including Lee Sedol in 2016.

● Businesses adopted AI for fraud detection, logistics, and customer support.

This transition highlighted AI’s ability to improve efficiency, productivity, and innovation, accelerating its adoption in business and technology. The period proved that AI could deliver measurable value beyond theory or experiments.

2020: Artificial Intelligence Becomes Mission-Critical

In 2020, AI became mission-critical for organizations across industries, which accelerated its adoption to address complex challenges and drive digital transformation. The COVID-19 pandemic forced businesses to shift rapidly toward remote work and digital operations, increasing demand for AI solutions that could automate processes, improve customer experiences, and support new ways of working.

AI-powered data analytics enabled real-time, data-driven decision-making as organizations dealt with rapidly changing market conditions. In healthcare, AI supported early diagnosis, drug discovery, resource optimization, and pandemic response efforts. AI also played a key role in supply chain optimization, helping businesses forecast demand, manage disruptions, and improve logistics efficiency.

At the same time, AI enhanced customer engagement through chatbots, personalization, and recommendation systems, while strengthening cybersecurity and fraud detection in a digital-first environment. Industries such as manufacturing and transportation increasingly adopted AI-driven automation and robotics to improve efficiency and safety.

Overall, 2020 marked a turning point in AI history, when AI evolved from a supportive tool into a core driver of resilience, innovation, and long-term business growth.

Biggest Lessons from the History of Artificial Intelligence

One of the most important lessons from the history of AI is the need for realistic expectations and continuous learning. AI’s development has repeatedly moved through cycles of excitement followed by disappointment; the downturns, commonly known as AI winters, came when the technology failed to meet exaggerated expectations. These periods remind us that progress in AI is uneven and requires patience.

However, each AI winter was eventually followed by renewed growth, driven by advances in computing power, algorithms, and data availability. This pattern shows that long-term success in AI depends on steady investment, research, and a balanced understanding of both its capabilities and limitations.

The evolution of AI also emphasizes the value of collaboration and interdisciplinary innovation. Breakthroughs in AI have emerged through the combined efforts of computer scientists, mathematicians, psychologists, neuroscientists, and domain experts, and this collaborative approach has enabled AI to solve complex real-world problems more effectively.

In short, the history of AI teaches us that sustainable progress requires balanced expectations, ethical responsibility, and continuous learning. By applying these lessons, organizations and societies can unlock AI’s transformative potential while ensuring it is developed and used for the greater good.

What AI History Means for Organizations Today

The evolution of AI offers valuable lessons for organizations aiming to maximize its potential. Learning from past successes and challenges empowers businesses to deploy AI strategies that are practical, ethical, and sustainable.

1. Build a Robust Foundation

Invest in data infrastructure, computing power, and AI tools before scaling AI initiatives. A strong foundation helps keep those initiatives fast, reliable, and adaptable to evolving business needs.

2. Start Small, Scale Smartly

History shows the value of beginning with pilot programs before scaling AI initiatives. This approach allows organizations to experiment, learn, and avoid costly mistakes, setting the stage for impactful expansion.

3. Align AI with Business Goals

AI should solve real business problems rather than exist as a novelty. Focus on projects that enhance decision-making, drive measurable results, and foster growth. Alignment with business objectives ensures AI contributes to long-term competitiveness.

4. Uphold Ethical Standards

Ethics, transparency, and fairness are critical as AI becomes pervasive. Organizations must reduce bias, protect data privacy, and maintain accountability to build trust with customers, employees, and regulators.

5. Foster Collaboration and Continuous Learning

Organizations that encourage cross-functional collaboration and ongoing learning are better equipped to innovate. Combining insights from diverse disciplines improves problem-solving and strengthens AI integration.

6. Prepare for Future AI Trends

With technologies like generative AI, foundation models, and autonomous systems advancing rapidly, organizations must anticipate trends and adapt proactively. Forward-looking strategies help businesses stay ahead of competitors.

In Conclusion

By studying the history of AI, organizations can make informed decisions, deploy AI responsibly, and unlock its full potential to drive innovation, operational efficiency, and sustained growth. Applying these lessons helps ensure AI becomes a strategic asset rather than just a technology experiment.